# Flowpipe: The cloud scripting engine

> Automation and workflow to connect your clouds to the people, systems and data that matter.

By Turbot Team

Published: 2023-12-13

## Introducing Flowpipe

Flowpipe is an open-source cloud scripting engine from [Turbot](https://turbot.com) that enables you to:

**Orchestrate your cloud**. Build simple steps into complex workflows. Run and test locally. Compose solutions across clouds using open source mods.

**Connect people and tools**. Connect your cloud data to people and systems using email, chat & APIs. Workflow steps can even run containers, custom functions, and more.

**Respond to events**. Run workflows manually or on a schedule. Trigger pipelines from webhooks or changes in data.

**Use code, not clicks**. Build and deploy DevOps workflows like infrastructure. Code in HCL and deploy from version control.

## Pipelines as code!

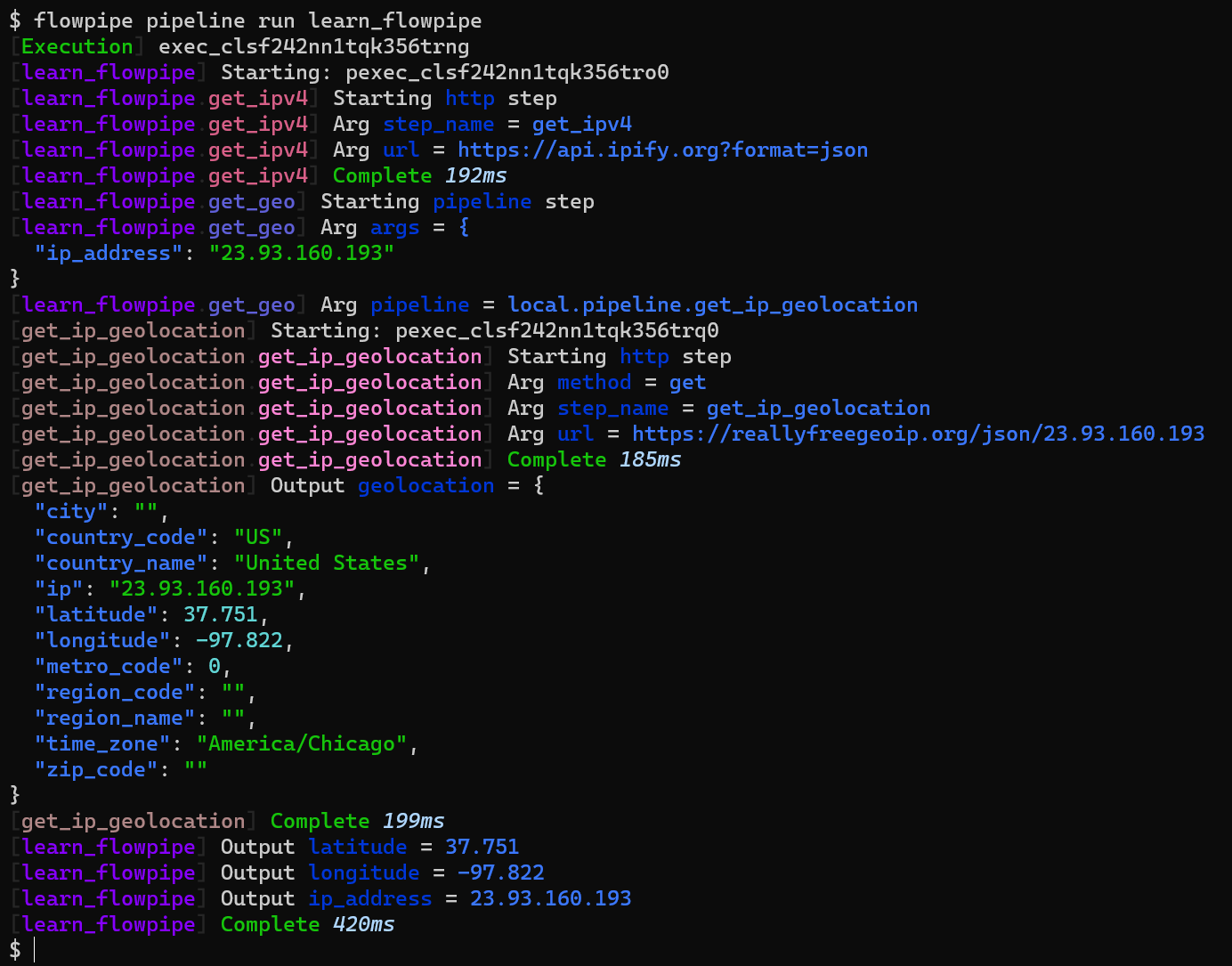

Here's a simple example, a two-step pipeline that gets your IP address and its location:

```hcl
pipeline "learn_flowpipe" {

  # Simple HTTP step to get data from a URL
  step "http" "get_ipv4" {
    url = "https://api.ipify.org?format=json"
  }

  # Run a nested pipeline from the reallyfreegeoip mod
  step "pipeline" "get_geo" {
    pipeline = reallyfreegeoip.pipeline.get_ip_geolocation
    args = {
      # Automatic dependency resolution determines step order:
      # referencing get_ipv4 makes this step wait for it
      ip_address = step.http.get_ipv4.response_body.ip
    }
  }

  output "ip_address" {
    value = step.http.get_ipv4.response_body.ip
  }

  output "latitude" {
    value = step.pipeline.get_geo.output.geolocation.latitude
  }

  output "longitude" {
    value = step.pipeline.get_geo.output.geolocation.longitude
  }
}
```

Pipelines are defined and [run](/docs/run/pipelines) on your local machine:

```sh
$ flowpipe pipeline run learn_flowpipe
```
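Pipelines can also take input through `param` blocks, which steps read as `param.<name>`. A minimal sketch, assuming a hypothetical `greet` pipeline with a `name` param and a `transform` step that builds the greeting:

```hcl
# Hypothetical pipeline illustrating a param with a default value
pipeline "greet" {
  param "name" {
    type    = string
    default = "world"
  }

  # A transform step evaluates an HCL expression; here it builds a string
  step "transform" "greeting" {
    value = "Hello, ${param.name}!"
  }

  output "greeting" {
    value = step.transform.greeting.value
  }
}
```

Running `flowpipe pipeline run greet` would use the default, and `--arg name=Jerry` should override it.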

## Step into cloud scripting

DevOps professionals use a vast repertoire of tools, scripts and processes to get through the day. Flowpipe's step primitives embrace all of them:

| Type | Description |
| --- | --- |
| [container](/docs/flowpipe-hcl/step/container) | Run a Docker container. |
| [email](/docs/flowpipe-hcl/step/email) | Send an email. |
| [function](/docs/flowpipe-hcl/step/function) | Run an AWS Lambda-compatible function. |
| [http](/docs/flowpipe-hcl/step/http) | Make an HTTP request. |
| [pipeline](/docs/flowpipe-hcl/step/pipeline) | Run another Flowpipe pipeline. |
| [query](/docs/flowpipe-hcl/step/query) | Run a SQL query (works great with [Steampipe](https://steampipe.io)!). |
| [sleep](/docs/flowpipe-hcl/step/sleep) | Wait for a defined time period. |
| [transform](/docs/flowpipe-hcl/step/transform) | Use HCL functions to transform data. |
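The `sleep` and `transform` steps need no external services, which makes them handy for experimenting. A small sketch, assuming a hypothetical `pause_then_report` pipeline. Because the transform does not reference the sleep step's output, `depends_on` is what orders the two steps:

```hcl
# Hypothetical pipeline combining a sleep and a transform step
pipeline "pause_then_report" {
  # Wait five seconds before continuing
  step "sleep" "wait" {
    duration = "5s"
  }

  # depends_on forces this step to run after the sleep completes
  step "transform" "report" {
    depends_on = [step.sleep.wait]
    value      = upper("finished waiting")
  }

  output "report" {
    value = step.transform.report.value
  }
}
```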

For example, here is a [container step](/docs/flowpipe-hcl/step/container) to run the AWS CLI:

```hcl
pipeline "another_pipe" {
  step "container" "aws_s3_ls" {
    image = "public.ecr.aws/aws-cli/aws-cli"
    cmd   = ["s3", "ls"]
    env   = credentials.aws["my_profile"].env
  }

  output "buckets" {
    value = step.container.aws_s3_ls.stdout
  }
}
```

Don't want to run a container? Use a mod, a query step, or a function step instead. There's more than one way to do it, so choose the method that best enables you to orchestrate your cloud and coordinate your team.

## Keep control, in good times and bad

Pipelines may start simple, but we know that things get complicated at cloud scale. Flowpipe has you covered with:

- [timeouts, retries and error handling](/docs/build/write-pipelines/errors)

- [conditional logic](/docs/build/write-pipelines/conditionals)

- [iteration](/docs/build/write-pipelines/iteration) and [parallelism](/docs/build/write-pipelines/control-flow#control-flow)

For example, `for_each` runs a step once for each item in a collection, in parallel:

```hcl
step "http" "add_a_user" {
  for_each = ["Jerry", "Elaine", "Newman"]

  url    = "https://myapi.local/api/v1/user"
  method = "post"

  # each.value is the current element of the collection
  request_body = jsonencode({
    user_name = each.value
  })
}
```
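The collection does not have to be hard-coded. A sketch, assuming a hypothetical `add_users` pipeline that takes the names as a `list(string)` param and reuses the same illustrative `myapi.local` endpoint:

```hcl
# Hypothetical pipeline: iterate over a list supplied as a param
pipeline "add_users" {
  param "users" {
    type    = list(string)
    default = ["Jerry", "Elaine", "Newman"]
  }

  # One HTTP request per user; each.value is the current list element
  step "http" "add_a_user" {
    for_each     = param.users
    url          = "https://myapi.local/api/v1/user"
    method       = "post"
    request_body = jsonencode({
      user_name = each.value
    })
  }
}
```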

And `retry` allows you to [retry the step](/docs/build/write-pipelines/errors#retry) when an error occurs:

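```hcl
step "http" "my_request" {
  url    = "https://myapi.local/subscribe"
  method = "post"

  # The http step sends its payload via request_body
  request_body = jsonencode({
    name = param.subscriber
  })

  # Up to 5 attempts, backing off exponentially between 100 ms and 10 s
  retry {
    max_attempts = 5
    strategy     = "exponential"
    min_interval = 100
    max_interval = 10000
  }
}
```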
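Conditional logic and error handling, both from the list above, combine naturally. A minimal sketch, assuming a hypothetical health-check endpoint: `error { ignore = true }` keeps a failed request from stopping the pipeline, and the `if` attribute runs the follow-up step only when the check did not return HTTP 200. How a connection failure (as opposed to an error status) is reflected in `status_code` is an assumption worth checking against the error-handling docs.

```hcl
# Hypothetical pipeline: check an endpoint and build an alert only on failure
pipeline "check_health" {
  param "health_url" {
    type    = string
    default = "https://myapi.local/health" # hypothetical endpoint
  }

  step "http" "health_check" {
    url = param.health_url

    # Continue the pipeline even if this request fails
    error {
      ignore = true
    }
  }

  # Runs only when the health check did not return 200
  step "transform" "alert_message" {
    if    = step.http.health_check.status_code != 200
    value = "Health check failed for ${param.health_url}"
  }
}
```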

## Composable Mods and the Flowpipe Hub

Flowpipe mods are open-source, composable pipelines, so you can remix and reuse your code or build on the great work of the community. The [Flowpipe Hub](https://hub.flowpipe.io) is a searchable directory of mods to discover and use. The source code for mods is [available on GitHub](https://github.com/topics/flowpipe-mod) if you'd like to learn or contribute.

[Flowpipe library mods](https://hub.flowpipe.io/?type=library) make it easy to work with common services including AWS, GitHub, Jira, Slack, Teams, Zendesk ... and many more!

[Flowpipe sample mods](https://hub.flowpipe.io/?type=sample) are ready-to-run samples that demonstrate patterns and use of various library mods.

[Creating your own mod](/docs/build/#initializing-a-mod) is easy:

```sh
mkdir my_mod
cd my_mod
flowpipe mod init
```

[Install the AWS mod](/docs/build/mod-dependencies) as a dependency:

```sh
flowpipe mod install github.com/turbot/flowpipe-mod-aws
```
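`flowpipe mod install` records the dependency in the mod's `mod.fp` file. Roughly, the result looks like the sketch below; the mod block name, title, and version constraint shown here are assumptions, and the installer may write them differently:

```hcl
# mod.fp (illustrative; the generated contents may differ)
mod "local" {
  title = "my_mod"

  # Dependencies resolved by `flowpipe mod install`
  require {
    mod "github.com/turbot/flowpipe-mod-aws" {
      version = "*"
    }
  }
}
```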

[Use the dependency](/docs/build/write-pipelines) in a pipeline step:

```sh
vi my_pipeline.fp
```

```hcl
pipeline "my_pipeline" {
  step "pipeline" "describe_ec2_instances" {
    pipeline = aws.pipeline.describe_ec2_instances
    args = {
      instance_type = "t2.micro"
      region        = "us-east-1"
    }
  }
}
```

Then run your pipeline with `flowpipe pipeline run my_pipeline`!
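To reuse the pipeline across regions and instance types, the hard-coded arguments can become params, and the nested pipeline's result can be exposed as an output. A sketch; the `region` and `instance_type` param names are illustrative, and since the AWS mod's output names are not shown here, the whole `output` map is passed through:

```hcl
pipeline "my_pipeline" {
  param "region" {
    type    = string
    default = "us-east-1"
  }

  param "instance_type" {
    type    = string
    default = "t2.micro"
  }

  step "pipeline" "describe_ec2_instances" {
    pipeline = aws.pipeline.describe_ec2_instances
    args = {
      instance_type = param.instance_type
      region        = param.region
    }
  }

  # Expose everything the nested pipeline returned
  output "instances" {
    value = step.pipeline.describe_ec2_instances.output
  }
}
```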

## Schedules, Events and Triggers

DevOps is filled with routine work, which Flowpipe is happy to do on a schedule. Just set up a [schedule trigger](/docs/flowpipe-hcl/trigger/schedule) to run a pipeline at regular intervals:

```hcl
trigger "schedule" "daily_3pm" {
  # Minute 0 of hour 15, every day
  schedule = "0 15 * * *"
  pipeline = pipeline.daily_task
}
```
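The `schedule` value uses the standard five-field cron format: minute, hour, day of month, month, day of week. Note that `*` in the minute field means every minute, so `* 15 * * *` would fire sixty times between 15:00 and 15:59. A second sketch, assuming a hypothetical `weekday_morning` trigger for the same pipeline:

```hcl
# Fields: minute hour day-of-month month day-of-week
# "30 9 * * 1-5" = 09:30 on weekdays (Monday=1 through Friday=5)
trigger "schedule" "weekday_morning" {
  schedule = "30 9 * * 1-5"
  pipeline = pipeline.daily_task
}
```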

Events are critical to the world of cloud scripting: we need to respond immediately to code pushes, infrastructure changes, Slack messages, and more. So Flowpipe has an [HTTP trigger](/docs/flowpipe-hcl/trigger/http) to handle incoming webhooks and run a pipeline:

```hcl
trigger "http" "my_webhook" {
  pipeline = pipeline.my_pipeline

  # Pass the incoming webhook body to the pipeline as its "event" arg
  args = {
    event = self.request_body
  }
}
```
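On the receiving side, the pipeline declares a param whose name matches the key in the trigger's `args`. A minimal sketch of such a receiving pipeline; `type = any` is an assumption chosen to accept whatever body the webhook sends:

```hcl
pipeline "my_pipeline" {
  # Populated from the trigger's args (event = self.request_body)
  param "event" {
    type = any
  }

  # Pass the payload through unchanged; real pipelines would act on it here
  step "transform" "received" {
    value = param.event
  }

  output "event" {
    value = step.transform.received.value
  }
}
```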

## Cloud scripting is the sweet spot for DevOps automation

Flowpipe is built for DevOps teams. Express pipelines, steps, and triggers in HCL — the familiar DevOps language. Compose pipelines using mods, and mix in SQL, Python functions, or containers as needed. Develop, test and run it all on your local machine — no deployment surprises or long debug cycles. Then schedule the pipelines or respond to events in real-time.

To get started with Flowpipe: [download](/downloads) the tool, follow the [tutorial](/docs), peruse the [library mods](https://hub.flowpipe.io/?type=library), and check out the [samples](https://hub.flowpipe.io/?type=sample). Then [let us know](https://turbot.com/community/join) how it goes!